Training Postgraduate Learners in Laceration Repair Using Video Conference Technology

By Stephen K Stacey, DO, Erin F Morcomb, MD, Karen C Cowan, MD, Mark D McEleney, MD, Christopher J Tookey, MD, La Crosse-Mayo Family Medicine Residency, La Crosse, WI

Background

Instructional design for remote procedural skills training has several obstacles1. These obstacles came to the forefront in the early days of social distancing directives due to COVID-19. How do we teach learners procedural skills if we cannot be side-by-side or even in the same room? How does one simultaneously teach learners remotely at home and across several different residencies? Is this teaching method effective, and does it appeal to all levels of learners? Our family medicine residency program developed a remote laceration repair training curriculum aimed at addressing these questions.

Intervention

We held a 2-hour virtual workshop on laceration repair techniques for 35 family medicine residents from four programs. The training covered injection techniques, instrument ties, and the following suture types: simple interrupted, vertical mattress, horizontal mattress, subcuticular, and corner stitch. Learners prepared by completing required readings2,3 and a pretest that assessed cognitive understanding as well as subjective comfort with the procedures. Suture training was done on chicken thighs with the skin intact, chosen for their resemblance to human skin and easy availability4. Each learner used a tablet, laptop computer, or cell phone and was provided with standard suture supplies (Figure 1). Learners practiced these sutures while information and demonstrations were delivered via a synchronous video teleconference led by faculty, allowing real-time interaction with the remote learners. The faculty lecturer broadcast a top-down view of the demonstration using ambient lighting and a standard tablet positioned horizontally on a platform with the camera pointed downward. After the live session, learners completed a posttest similar in content to the pretest to assess acquisition of knowledge and confidence in each technique. They also submitted a video recording and photograph of each suture technique, which were graded using a faculty-developed rubric based on similar previously published rubrics5,6.

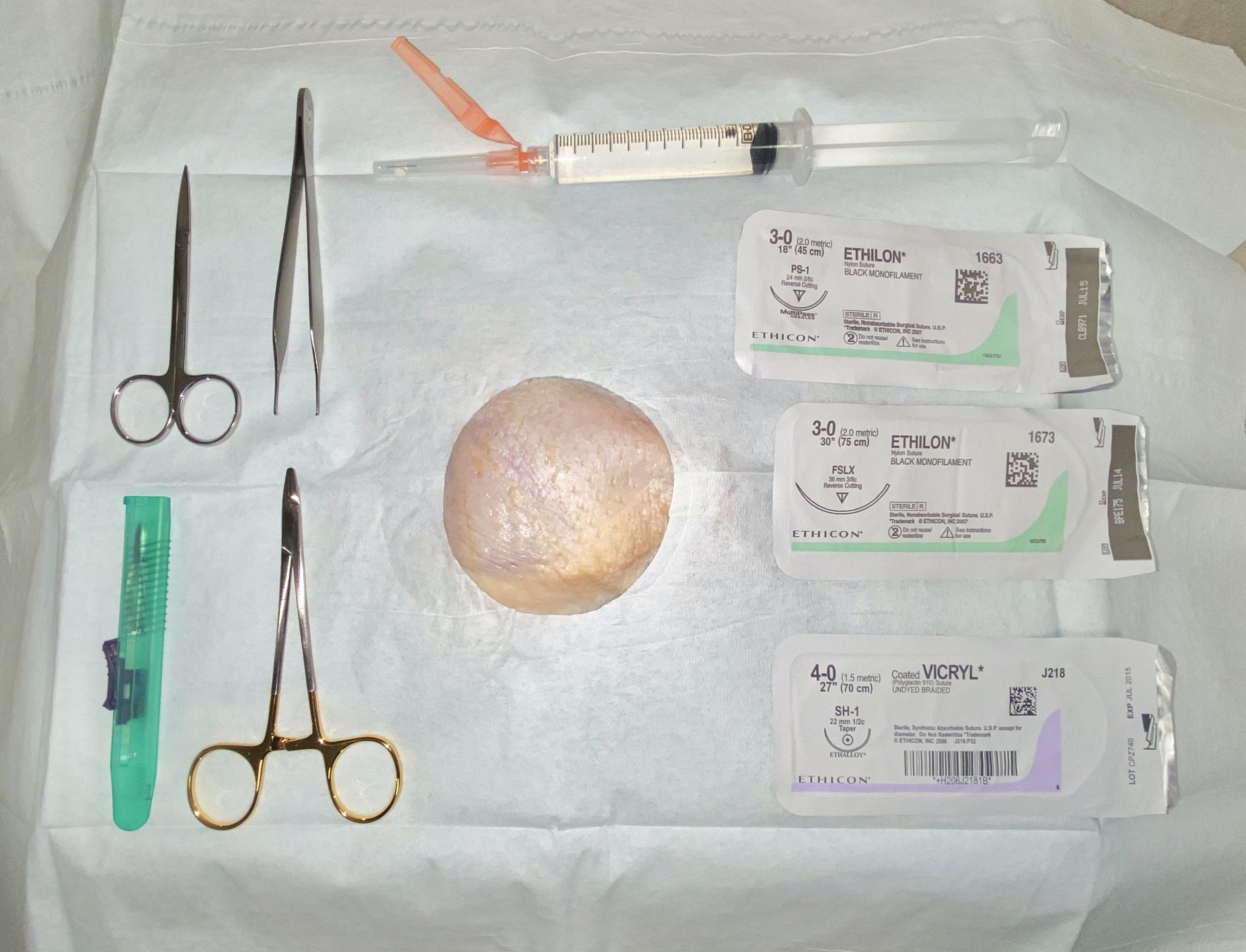

Figure 1

Supplies used for the procedure workshop include a chicken thigh with skin, absorbent pad, fenestrated drape, iris scissors, tissue forceps, needle drivers, scalpel, absorbable and non-absorbable sutures, and a syringe with injection needle. Supplies not pictured include disposable exam gloves, a sharps container, and a tablet or cell phone capable of making video calls.

Results

The learner survey contained two open-ended questions: (1) “How would you rate the training overall?” and (2) “How would you compare this training with prior training events?” From 35 learners, we received 67 of 70 possible responses to the two questions (96% completion rate). Of these responses, 38 (57%) were positive, 9 (13%) were negative, and 20 (30%) were neutral or a mixture of positive and negative. Residents were also asked to rate their confidence in performing the procedures on a 10-point scale both before and after the training. Pretest and posttest scores were compared, and a P-value was calculated using a two-sample t-test assuming unequal variance (Table 1).
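The response percentages above can be rechecked with a few lines of arithmetic. The sketch below uses only the counts reported in this section; the variable names are ours, and the 70 possible responses assume that each of the 35 learners could answer both questions.

```python
# Counts as reported in the Results section.
learners = 35
questions = 2
responses = 67
counts = {"positive": 38, "negative": 9, "neutral or mixed": 20}

# Assumption: every learner could answer both open-ended questions.
possible = learners * questions

completion_pct = round(100 * responses / possible)
share_pct = {label: round(100 * n / responses) for label, n in counts.items()}

print(f"completion: {completion_pct}% of {possible} possible responses")
for label, pct in share_pct.items():
    print(f"{label}: {pct}%")
```

The category counts sum to the 67 responses received, so the three percentages share the same denominator.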

Table 1: Increase in Procedural Confidence of Learners by Postgraduate Year.

| Postgraduate year (PGY) | Increase in confidence (10-point scale) | P-value |
| --- | --- | --- |
| PGY-1 | 2.29 | < 0.001 |
| PGY-2 | 1.64 | 0.045 |
| PGY-3 | 1.43 | 0.011 |
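For readers who want to reproduce this kind of comparison, the following is a minimal sketch of a two-sample t-test assuming unequal variance (Welch's test). It computes the test statistic and the Welch-Satterthwaite degrees of freedom using only the Python standard library. The function name `welch_t` and the example scores are hypothetical illustrations, not the study's data or analysis code.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(pre, post):
    """Return (t, df) for a two-sample t-test assuming unequal variance."""
    n1, n2 = len(pre), len(post)
    # Sample variances (n - 1 denominator), each scaled by its group size.
    s1 = variance(pre) / n1
    s2 = variance(post) / n2
    # Positive t means the posttest mean is higher than the pretest mean.
    t = (mean(post) - mean(pre)) / sqrt(s1 + s2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (s1 + s2) ** 2 / (s1 ** 2 / (n1 - 1) + s2 ** 2 / (n2 - 1))
    return t, df


# Hypothetical 10-point confidence ratings, for illustration only.
pre_scores = [3, 4, 5, 4, 6, 5, 3]
post_scores = [6, 7, 6, 8, 7, 7, 6]

t_stat, dof = welch_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A P-value then follows from the t distribution with `dof` degrees of freedom. If SciPy is available, `scipy.stats.ttest_ind(post_scores, pre_scores, equal_var=False)` performs the same test and returns the P-value directly.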

Conclusions

Procedural skills training that combines preparatory reading with synchronous video demonstration provides a learning experience that is both effective and rated highly by participants at all stages of residency training. Although this was not specifically assessed, we expect that all residents had prior exposure to suturing. We hypothesized that residents with more prior exposure would report lower average improvement. With postgraduate year as a proxy for experience, this appears to be the case: the mean increase in confidence fell from 2.29 points in PGY-1 to 1.43 points in PGY-3. This remote training showed that learners from several different residencies are able to participate simultaneously from dozens of locations.

Further investigations could assess expanding real-time feedback by having more faculty members present to cycle through the video feeds during the session, or could compare remote learning with similar in-person training. This protocol is low-cost and easy to implement with commonly available supplies. This method holds potential for situations in which large-group assembly is precluded, resources are scarce, or travel is a limiting factor.

References

1. Christensen MD, Oestergaard D, Dieckmann P, et al. Learners' Perceptions During Simulation-Based Training: An Interview Study Comparing Remote Versus Locally Facilitated Simulation-Based Training. Simul Healthc. 2018 Oct;13(5):306-315.

2. Forsch RT, Little SH, Williams C. Laceration Repair: A Practical Approach. Am Fam Physician. 2017 May 15;95(10):628-636.

3. Worster B, Zawora MQ, Hsieh C. Common questions about wound care. Am Fam Physician. 2015 Jan 15;91(2):86-92.

4. Denadai R, Saad-Hossne R, Martinhão Souto LR. Simulation-based cutaneous surgical-skill training on a chicken-skin bench model in a medical undergraduate program. Indian J Dermatol. 2013 May;58(3):200-7.

5. Williamson JA, Farrell R, Skowron C, et al. Evaluation of a method to assess digitally recorded surgical skills of novice veterinary students. Vet Surg. 2018 Apr;47(3):378-384.

6. Routt E, Mansouri Y, de Moll EH, et al. Teaching the Simple Suture to Medical Students for Long-term Retention of Skill. JAMA Dermatol. 2015 Jul;151(7):761-5.